Reactive Architecture. Theoretical Dive into Blocking Architecture

Business needs first

Goals

The primary objective of every software engineer is not to build solutions with the latest library or architecture; it is to help businesses solve their problems. To achieve this, software engineers need to provide solutions that solve business problems efficiently, with minimal errors and optimal cost, so that the business is ultimately profitable. This goal is critical and directly informs how we allocate our resources and design our systems.

With today’s globalization and businesses targeting customers worldwide, no one is surprised by 10k requests from geo-distributed customers. Our common challenges now are:

- Scaling to handle a substantial number of simultaneous requests efficiently.

- Supporting complex business workflows with robust backend systems while managing inter-service dependencies effectively.

- Serving a global user base with varying network conditions while maintaining consistent performance across regions.

Given these challenges, the solution must be easy to scale, resilient, low-latency, and geo-distributed.

Modern Architectural Best Practices

Modern architecture should leverage the following principles (*1):

- Loosely Coupled Components. Reduce dependencies to enable independent evolution, testing, and scaling.

- Microservices-Based Design. Enable horizontal scalability, allowing individual services to scale independently based on demand.

- Geo-Distributed Deployments. Deploy services closer to customers in several geographical regions to reduce latency and improve availability.

- Fault Tolerance. Keep the service operational even if one of its components fails, for example during a server outage or a data center failure.

To solve these challenges, our architecture must be robust, adaptive, scalable, fault-tolerant, and geo-distributed.

Architecture patterns

In this article, we will not cover every architecture and principle for building solid solutions, nor dig into them deeply. I want to focus on the service communication aspect, but first we need a high-level look at the most popular architectural patterns. If you want more detail, follow the links at the end of the article.

We will concentrate on two core architectural patterns (*2):

- Monolithic Architecture

- Microservice Architecture

Monolithic Architecture

The application is built as a single project containing all services and components. All components, such as business logic, data access, and user interface, are interconnected and interdependent, run in a single process, and share memory. (*3)

Pros: Simple to develop, test, and deploy.

Cons: Difficult to scale and maintain, because tightly coupled components make it hard to update or modify parts of the system.

Microservices Architecture

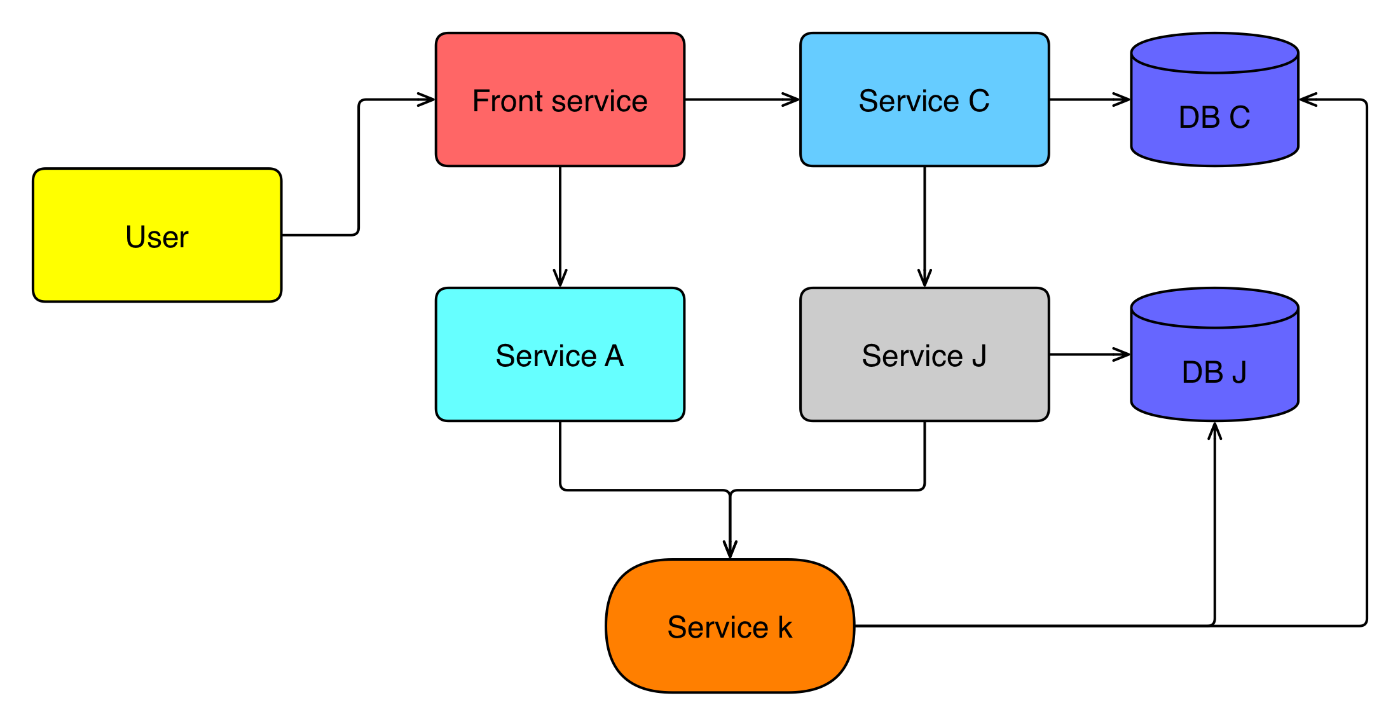

The application is split into smaller independent applications grouped by business components/services. Each service is focused on a specific business functionality and communicates with other services via remote APIs. (*4)

Pros: Scalability and ease of maintenance, because each service can be developed, deployed, and scaled independently.

Cons: Increased complexity in service management and the need for a sophisticated deployment setup; service-to-service communication can also become a bottleneck.

Service Communication Patterns

Service communication patterns define how services/applications interact with each other. There are two basic patterns (*5):

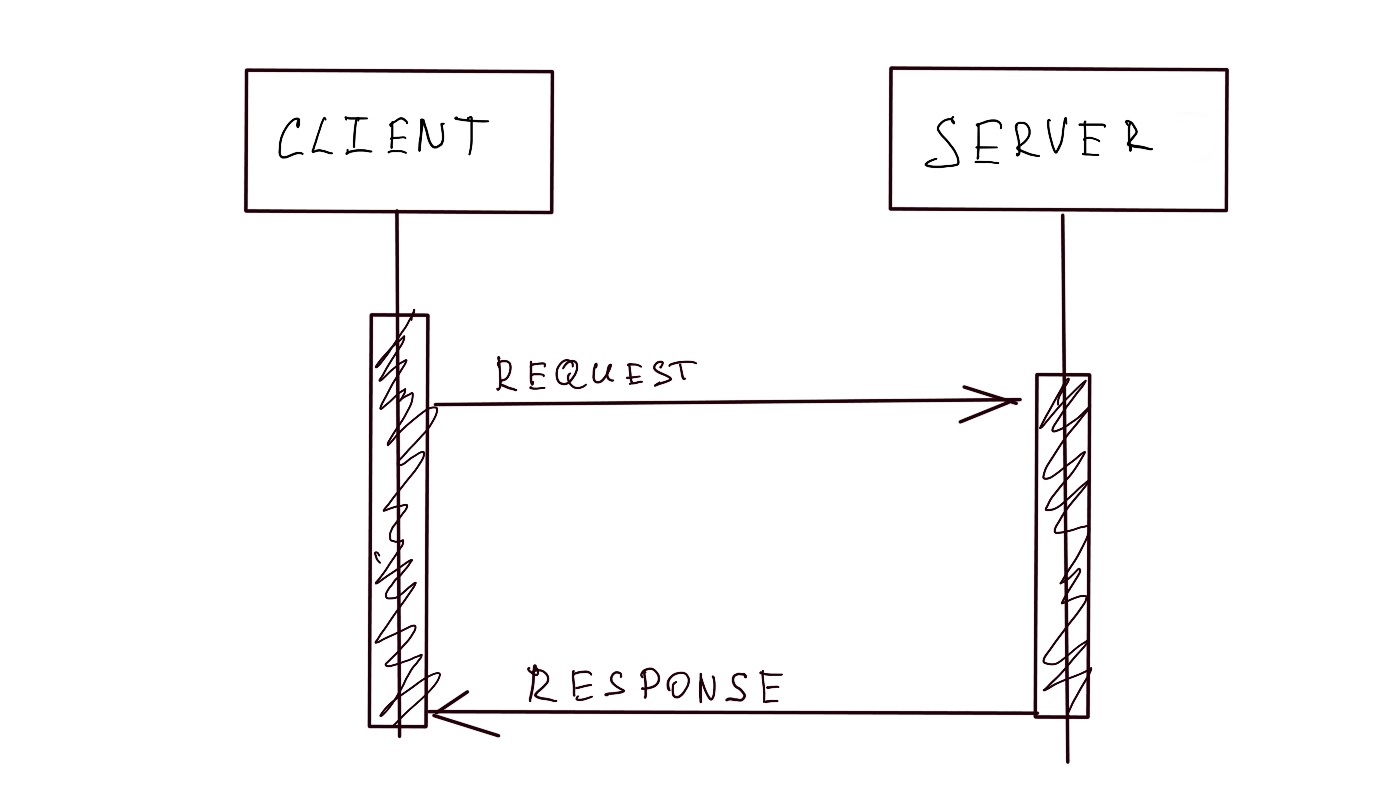

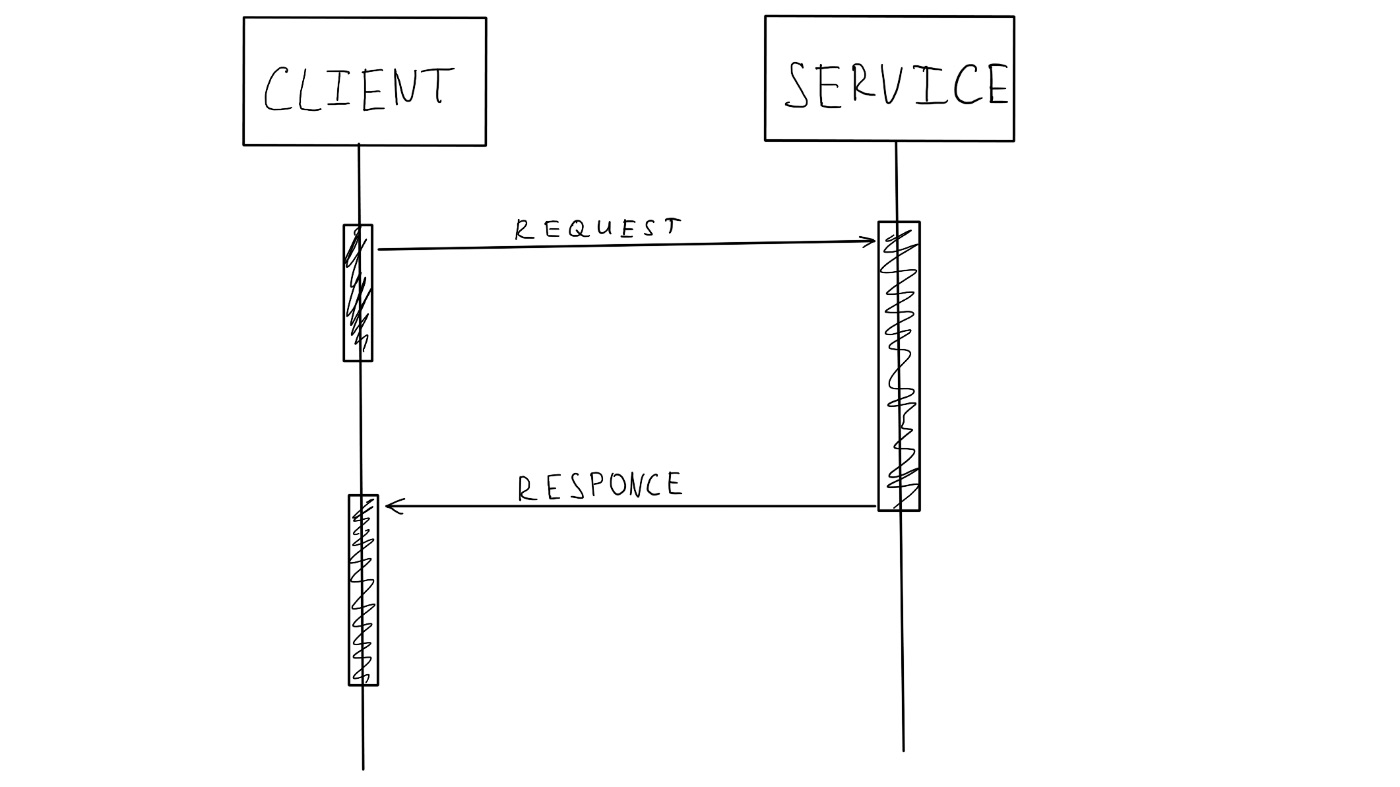

Synchronous Pattern

In this pattern, a service calls an API that another service exposes, using a protocol such as HTTP or gRPC, and waits for a response from the remote service.
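
As a minimal sketch of the synchronous pattern, the snippet below simulates the remote call in-process. The names `inventory_service` and `order_service` are hypothetical, and `time.sleep` is only a stand-in for the network round trip and remote processing time; a real system would use an HTTP or gRPC client here.

```python
import time


def inventory_service(item_id: str) -> dict:
    """Hypothetical remote service; the sleep stands in for network and processing time."""
    time.sleep(0.05)
    return {"item_id": item_id, "in_stock": True}


def order_service(item_id: str) -> str:
    # Synchronous call: the caller cannot continue until the response arrives.
    response = inventory_service(item_id)
    return "accepted" if response["in_stock"] else "rejected"


if __name__ == "__main__":
    start = time.perf_counter()
    print(order_service("sku-1"))
    print(f"caller waited {time.perf_counter() - start:.2f}s")
```

The caller’s total time always includes the full time of the remote call, which is the defining property of this pattern.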

Asynchronous Pattern

In this pattern, a service sends a message without waiting for a response, and one or more services process the message asynchronously.
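
The asynchronous pattern can be sketched with an in-memory queue standing in for a message broker. This is an illustration under assumptions, not a production setup: `consumer`, `run_demo`, and the order IDs are made-up names, and a real deployment would use a broker between separate processes instead of `queue.Queue` between threads.

```python
import queue
import threading


def consumer(messages: queue.Queue, processed: list) -> None:
    """Process messages until the sentinel value None arrives."""
    while True:
        message = messages.get()
        if message is None:
            break
        processed.append(f"handled {message}")


def run_demo() -> list:
    messages: queue.Queue = queue.Queue()
    processed: list = []
    worker = threading.Thread(target=consumer, args=(messages, processed))
    worker.start()
    for order_id in ("order-1", "order-2", "order-3"):
        # put() returns immediately; the producer does not wait for processing.
        messages.put(order_id)
    messages.put(None)  # tell the consumer to stop
    worker.join()       # only the demo waits here, to collect the results
    return processed


if __name__ == "__main__":
    print(run_demo())
```

The producer only hands the message over; when and by whom it is processed is decided on the consumer side.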

Summary

These two patterns are the basic building blocks of service communication. Which one to use depends on the system’s requirements, such as scalability, responsiveness, and resource management, as well as its constraints and domain-specific needs.

Practice

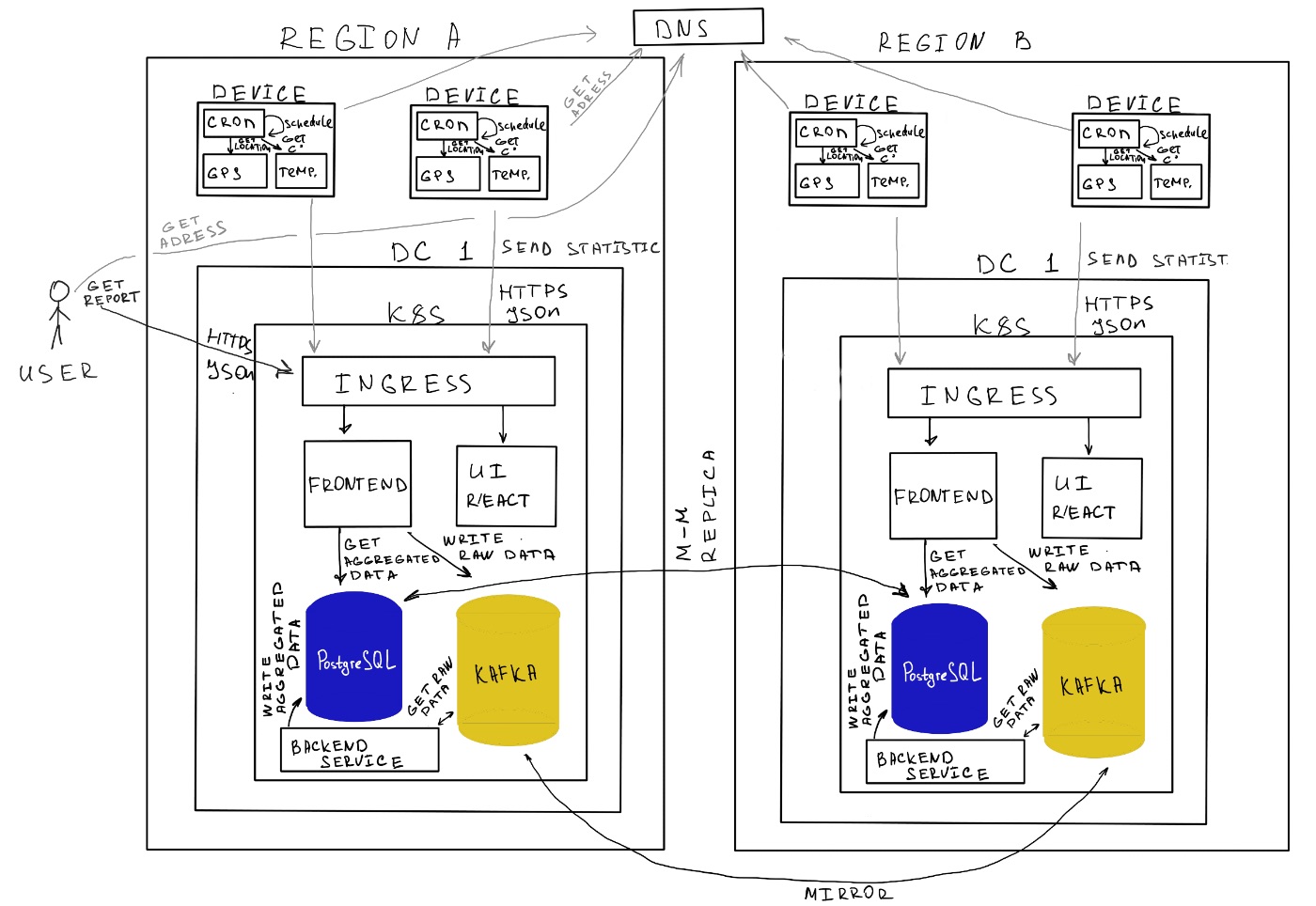

Artificial Challenge. Design an Architecture for Global Temperature Monitoring System

Background:

We are deploying temperature monitoring devices worldwide. Each device comprises a standard PC connected to the internet and equipped with:

- Temperature Sensor: Provides real-time temperature readings.

- GPS Tracker: Offers current geographic location data.

Note: Internet connectivity and speed are unstable and can fluctuate based on location and time.

Requirements:

Data Collection:

- Collect temperature readings from all devices globally.

- Ensure data is accurately associated with each device’s location.

Data Visualization:

- Display real-time temperature data on a global map based on device locations.

Data History:

- Maintain a historical log of temperature readings for each individual device.

- Enable retrieval and analysis of historical data per device.

System Reliability:

- Design the service to be fault-tolerant.

- Guarantee data delivery despite unreliable internet connections.

- Implement mechanisms to handle data buffering and retransmission as needed.
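
To make the buffering and retransmission requirement concrete, here is a minimal store-and-forward sketch for the device side. Everything in it is an assumption for illustration: the `ReadingBuffer` class, the shape of a reading, and the `send` callback that returns `True` when the server acknowledged the reading. The buffer lives in memory only; a real device would likely persist it to disk.

```python
from collections import deque
from typing import Callable


class ReadingBuffer:
    """Keeps readings until the transport confirms delivery (hypothetical helper)."""

    def __init__(self, send: Callable[[dict], bool]) -> None:
        self._send = send          # returns True if the reading was acknowledged
        self._pending: deque = deque()

    def record(self, reading: dict) -> None:
        self._pending.append(reading)

    def flush(self) -> int:
        """Send buffered readings oldest first; stop at the first failure.

        Returns the number of readings delivered in this attempt.
        """
        delivered = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break              # keep the reading and retry on the next flush
            self._pending.popleft()
            delivered += 1
        return delivered

    def pending(self) -> int:
        return len(self._pending)
```

A reading is removed only after a successful send, so nothing is lost while the connection is down. If an acknowledgement is lost, the same reading may be sent twice, so the receiving side should tolerate duplicates.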

Objective:

Develop a comprehensive architecture that addresses the above requirements. The solution should consider scalability, reliability, and efficiency, ensuring seamless operation across diverse geographic locations with varying network conditions.

Solution

In most cases, if you ask someone to sketch the architectural design of an application, you’re likely to get a similar diagram.

In this example, we will not cover solutions based on a monolithic architecture.

Problems

Microservice architecture has become more popular than monolithic architecture thanks to its scalability, flexibility, and alignment with modern software development practices. However, it also comes with many problems (*6), (*7).

Complexity of Development and Deployment

Managing multiple independent services, each of which can have its own technology stack, development lifecycle, and deployment pipeline, can lead to problems with coordination and consistency in development and deployment.

Data Management Challenges

In a microservices architecture, each service typically manages its own database (not always a separate instance). This leads to problems in maintaining data consistency, especially across distributed transactions.

Distributed System Complexity

Microservices architectures are distributed systems, which means that such systems will have issues such as network latency, partial failures, and problems in service communication.

Service Dependency Management

Microservices depend on each other, and in most cases it is not as simple as Service A relying on Service B. There can be hundreds of services, and their dependencies form a graph that may even contain cycles. Managing such dependencies during deployment and updates can be challenging.
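
One way to see whether a dependency graph contains a cycle is a depth-first search. The sketch below assumes the dependencies are available as a plain dictionary from a service name to the services it calls; the service names and the `find_cycle` function are hypothetical.

```python
def find_cycle(dependencies: dict) -> list:
    """Return one dependency cycle as a list of service names, or [] if there is none."""
    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on the current path, fully explored
    state: dict = {}
    path: list = []

    def visit(service: str) -> list:
        state[service] = GRAY
        path.append(service)
        for dep in dependencies.get(service, []):
            if state.get(dep, WHITE) == GRAY:
                # dep is already on the current path, so we closed a loop.
                return path[path.index(dep):] + [dep]
            if state.get(dep, WHITE) == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[service] = BLACK
        return []

    for service in dependencies:
        if state.get(service, WHITE) == WHITE:
            cycle = visit(service)
            if cycle:
                return cycle
    return []


if __name__ == "__main__":
    services = {
        "orders": ["payments"],
        "payments": ["notifications"],
        "notifications": ["orders"],
        "reports": ["orders"],
    }
    print(find_cycle(services))
```

A check like this could run in a build pipeline to flag circular dependencies before they reach deployment.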

Communication Overhead

Microservices communicate over a network, typically using HTTP or message queues. This adds communication overhead and can lead to performance bottlenecks.

Testing Complexity

Testing a microservices-based application is more complicated than testing a monolithic application.

Increased Resource Consumption

Each microservice typically runs in its own process with its own environment, often in its own container or virtual machine. This can lead to increased resource consumption compared to a monolithic application, and therefore to higher infrastructure costs.

Complexity in Managing Failures

In a distributed system such as a microservices application, handling failures carefully becomes more complex.

In this article, we’ll concentrate on communication problems.

Understanding Blocking

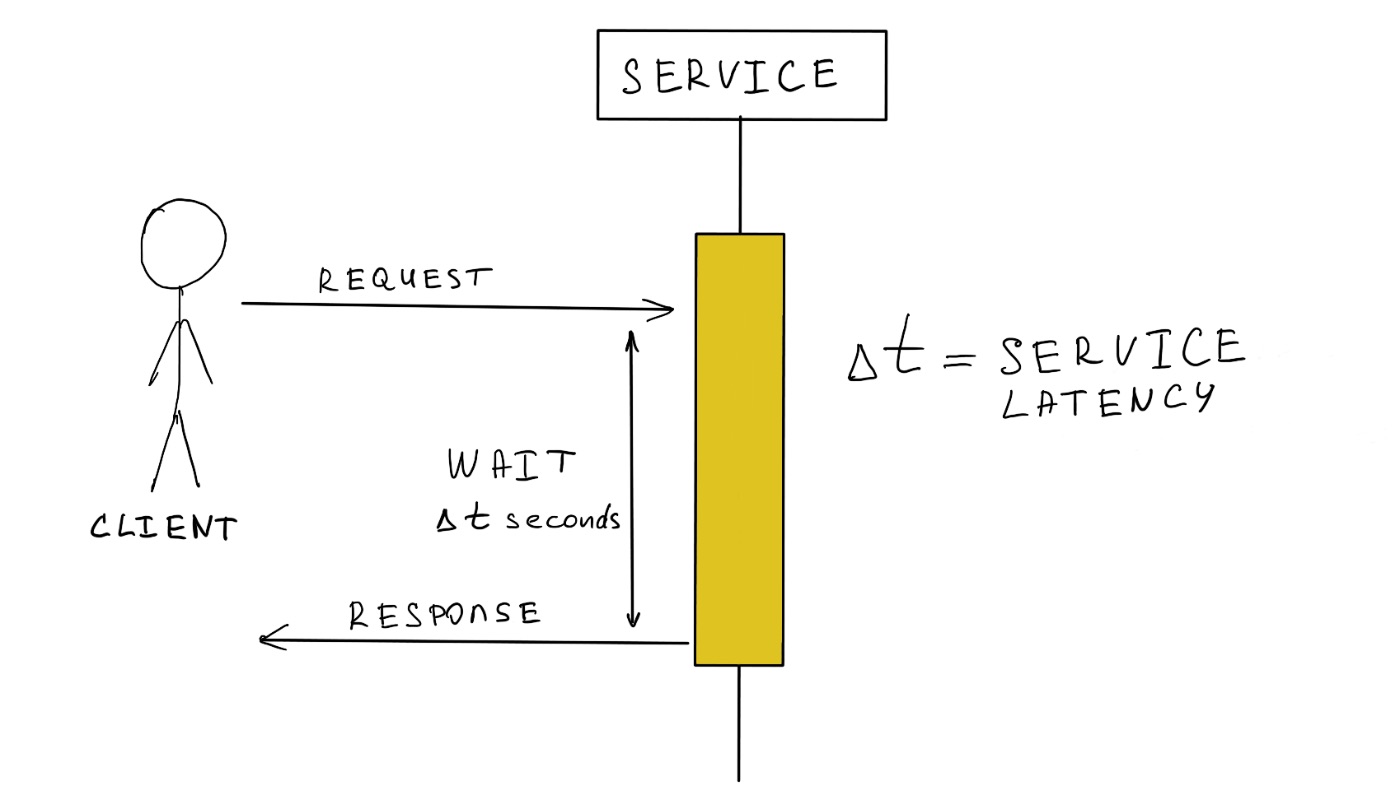

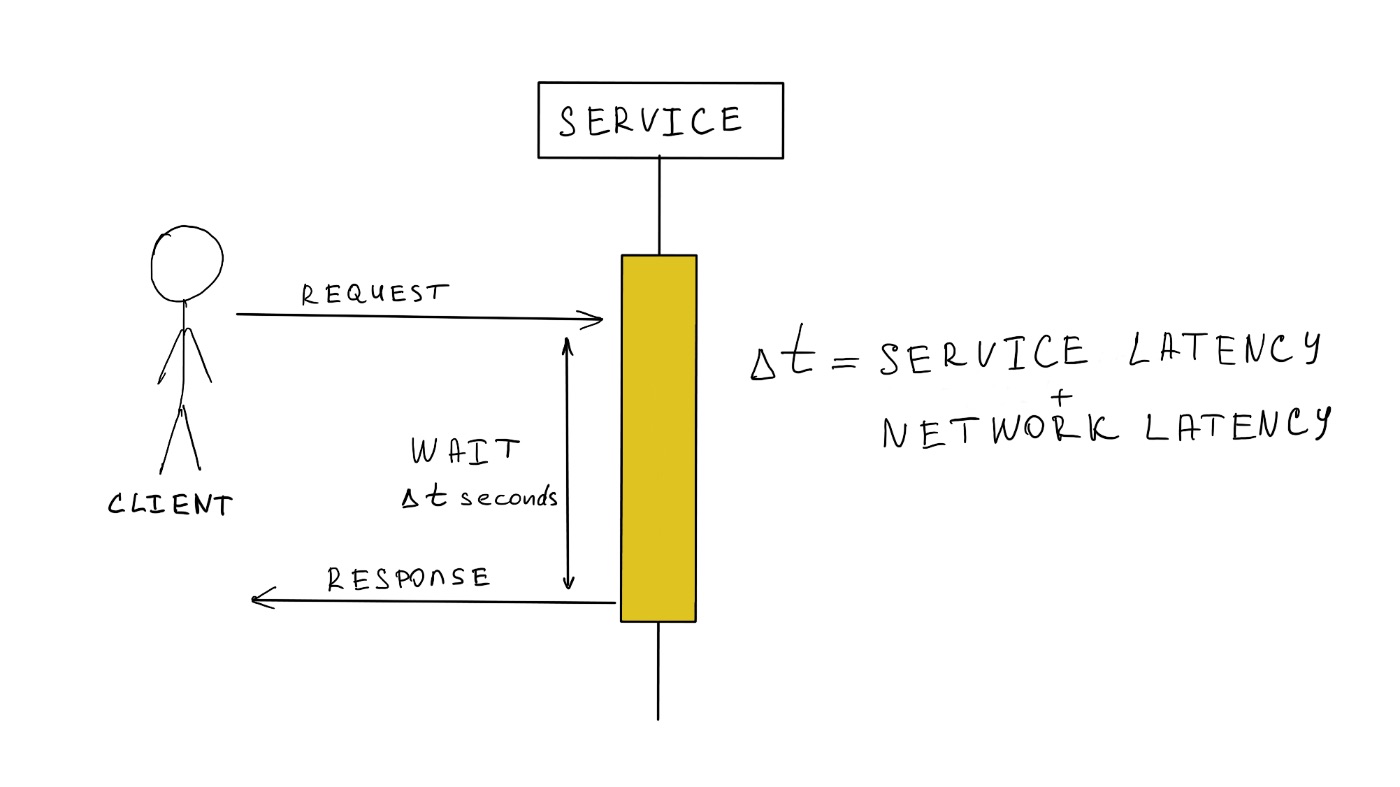

A blocking operation/call is one where the underlying system stops (“blocks”) execution of the calling thread until the operation completes (*8). During this period, the thread cannot perform any other compute tasks.

Common examples of blocking operations:

- I/O Operations. Reading from or writing to a disk, database, or network socket. (*9)

- Waiting for a Lock. When a thread is trying to acquire a lock on a resource that is currently held by another thread. (*10)
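
The lock case can be illustrated with a small experiment. In this sketch one thread holds a lock for a fixed time while another measures how long it stays blocked trying to acquire it. The function name `measure_lock_wait` and the timing values are made up for illustration.

```python
import threading
import time


def measure_lock_wait(hold_seconds: float) -> float:
    """Return how long the calling thread was blocked waiting for a held lock."""
    lock = threading.Lock()
    holder_ready = threading.Event()

    def holder() -> None:
        with lock:
            holder_ready.set()        # signal that the lock is now taken
            time.sleep(hold_seconds)  # keep the lock for a while

    thread = threading.Thread(target=holder)
    thread.start()
    holder_ready.wait()               # make sure the other thread owns the lock
    start = time.perf_counter()
    with lock:                        # blocks here until the holder releases it
        waited = time.perf_counter() - start
    thread.join()
    return waited


if __name__ == "__main__":
    print(f"blocked for {measure_lock_wait(0.1):.2f}s")
```

During the wait the calling thread does no useful work, which is exactly the behavior described above.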

How Blocking Operations Affect Performance

Blocking operations can significantly impact performance, especially in applications that have high concurrency. Let’s focus on the main problems.

Resource Underutilization

When a thread is blocked, it does nothing until the operation completes. If many threads are blocked simultaneously, the CPU can sit idle while the blocked threads still hold memory, so system resources are underutilized.

Scalability Issues

For applications that need to handle many concurrent operations (such as web servers handling multiple client requests), blocking operations can quickly become a bottleneck. If each request blocks the thread handling it, the server can run out of available threads, leading to slow response times or even failures such as a Gateway Timeout.
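
The effect of running out of threads can be reproduced with a small thread pool. This is a sketch with assumed numbers: `handle_request` simulates a blocking downstream call with `time.sleep`, and `serve` is a hypothetical helper that pushes a batch of requests through a pool of a given size.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(delay: float) -> None:
    time.sleep(delay)  # stand-in for a blocking call to a DB or another service


def serve(requests: int, workers: int, delay: float) -> float:
    """Handle all requests on a fixed-size pool and return the total elapsed time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(handle_request, [delay] * requests))
    return time.perf_counter() - start


if __name__ == "__main__":
    # 6 requests on 2 threads are processed in 3 waves; on 6 threads in 1 wave.
    print(f"2 workers: {serve(6, 2, 0.05):.2f}s")
    print(f"6 workers: {serve(6, 6, 0.05):.2f}s")
```

With fewer threads than in-flight requests, the extra requests wait in the queue even though the CPU is almost idle, so response time grows with load instead of with the amount of actual work.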

Increased Latency

Blocking operations/calls can increase the time needed to complete the main task, particularly if the blocking operation is slow (like a network request to another service or a DB). This latency can degrade the user experience in real-time applications.

Summary

Blocking operations can hurt the performance, scalability, and latency of an application. By understanding where and how blocking operations occur, software engineers can avoid the main problems by using non-blocking alternatives. Better architecture leads to better resource utilization, reduced latency, improved overall system performance, and lower resource costs.
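
As one possible illustration of a non-blocking alternative, the sketch below uses Python’s `asyncio`. The service names and delays are invented, and `asyncio.sleep` stands in for a non-blocking network call. It is not the only way to avoid blocking, just a compact one.

```python
import asyncio
import time


async def call_service(name: str, delay: float) -> str:
    # While this call is waiting, the event loop is free to run other tasks.
    await asyncio.sleep(delay)
    return f"{name} done"


async def call_all() -> list:
    # The three calls wait concurrently on a single thread.
    return await asyncio.gather(
        call_service("profile", 0.1),
        call_service("orders", 0.1),
        call_service("recommendations", 0.1),
    )


def run() -> tuple:
    start = time.perf_counter()
    results = asyncio.run(call_all())
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = run()
    print(results, f"{elapsed:.2f}s")
```

Because the waits overlap, the total time is close to the slowest single call and not to the sum of all three, and no thread sits blocked in the meantime.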

What is Network Blocking?

Network blocking operations/calls are the same blocking operations/calls that we discussed in the previous section, applied to network I/O. Network blocking occurs when a thread is paused while waiting for a network communication to complete. This typically happens when a thread sends a network request (for example, an HTTP request, a DB query, or any other remote call) and waits for the response before continuing its execution. (*11)

Network blocking has a higher impact on application performance because of the overhead of network communication. I will not cover all performance issues and benchmark comparisons, but if you are interested, you can check these articles:
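
A blocking network read can be observed without a real network by using a local socket pair. In this sketch `socket.socketpair()` stands in for a client/server connection, the “server” thread replies after an artificial delay, and the payload `b"21.5C"` is made up.

```python
import socket
import threading
import time


def blocking_receive(reply_delay: float) -> tuple:
    """Return the received bytes and how long recv() kept the caller blocked."""
    client, server = socket.socketpair()

    def slow_server() -> None:
        time.sleep(reply_delay)   # simulated processing and network latency
        server.sendall(b"21.5C")
        server.close()

    thread = threading.Thread(target=slow_server)
    thread.start()
    start = time.perf_counter()
    data = client.recv(1024)      # the calling thread is blocked until data arrives
    waited = time.perf_counter() - start
    thread.join()
    client.close()
    return data, waited


if __name__ == "__main__":
    data, waited = blocking_receive(0.1)
    print(data, f"blocked for {waited:.2f}s")
```

The time spent inside `recv()` is controlled entirely by the other side and by the network, which is why network blocking is harder to bound than local blocking operations.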

https://www.geeksforgeeks.org/performance-of-a-network/

https://www.baeldung.com/linux/ipc-performance-comparison

https://3tilley.github.io/posts/simple-ipc-ping-pong/

Conclusion

Designing a modern architecture requires efficiency, scalability, and reliability. By understanding the impact of blocking operations and applying modern architectural practices such as microservices, asynchronous communication, and fault-tolerant design, we can build systems that handle high load and complex business workflows.

Modern architecture, with its use of non-blocking operations and asynchronous communication, can offer a more powerful solution than traditional blocking systems. By adhering to these principles, you can ensure resiliency, agility, and the ability to deliver services to customers even in the face of variable network conditions and high demand.

Links:

- (*1) https://www.lucidchart.com/blog/how-to-design-software-architecture

- (*2) https://www.simform.com/blog/software-architecture-patterns

- (*3) https://en.wikipedia.org/wiki/Monolithic_application

- (*4) https://en.wikipedia.org/wiki/Microservices

- (*5) https://learn.microsoft.com/en-us/azure/architecture/microservices/design/interservice-communication

- (*6) https://developer.ibm.com/articles/challenges-and-benefits-of-the-microservice-architectural-style-part-1/

- (*7) https://dev.to/somadevtoo/10-microservices-architecture-challenges-for-system-design-interviews-6g0

- (*8) https://en.wikipedia.org/wiki/Blocking_(computing)

- (*9) https://www.geeksforgeeks.org/blocking-and-nonblocking-io-in-operating-system/

- (*10) https://en.wikipedia.org/wiki/Lock_(computer_science)

- (*11) https://dev.to/vivekyadav200988/understanding-blocking-and-non-blocking-sockets-in-c-programming-a-comprehensive-guide-2ien